Notes from a few sessions at this one-day workshop held at the UCL AI Centre, Holborn on 13 March 2023.

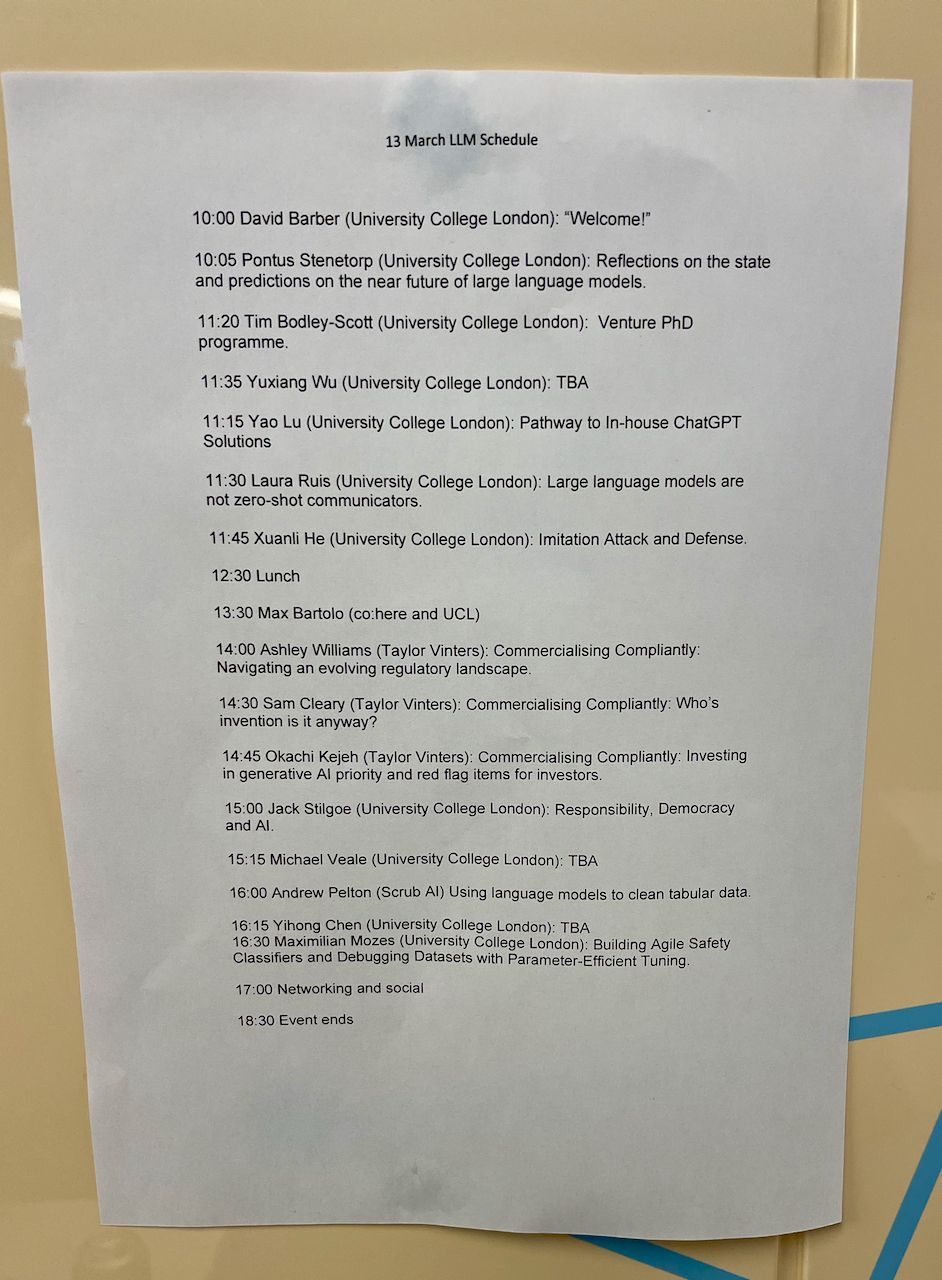

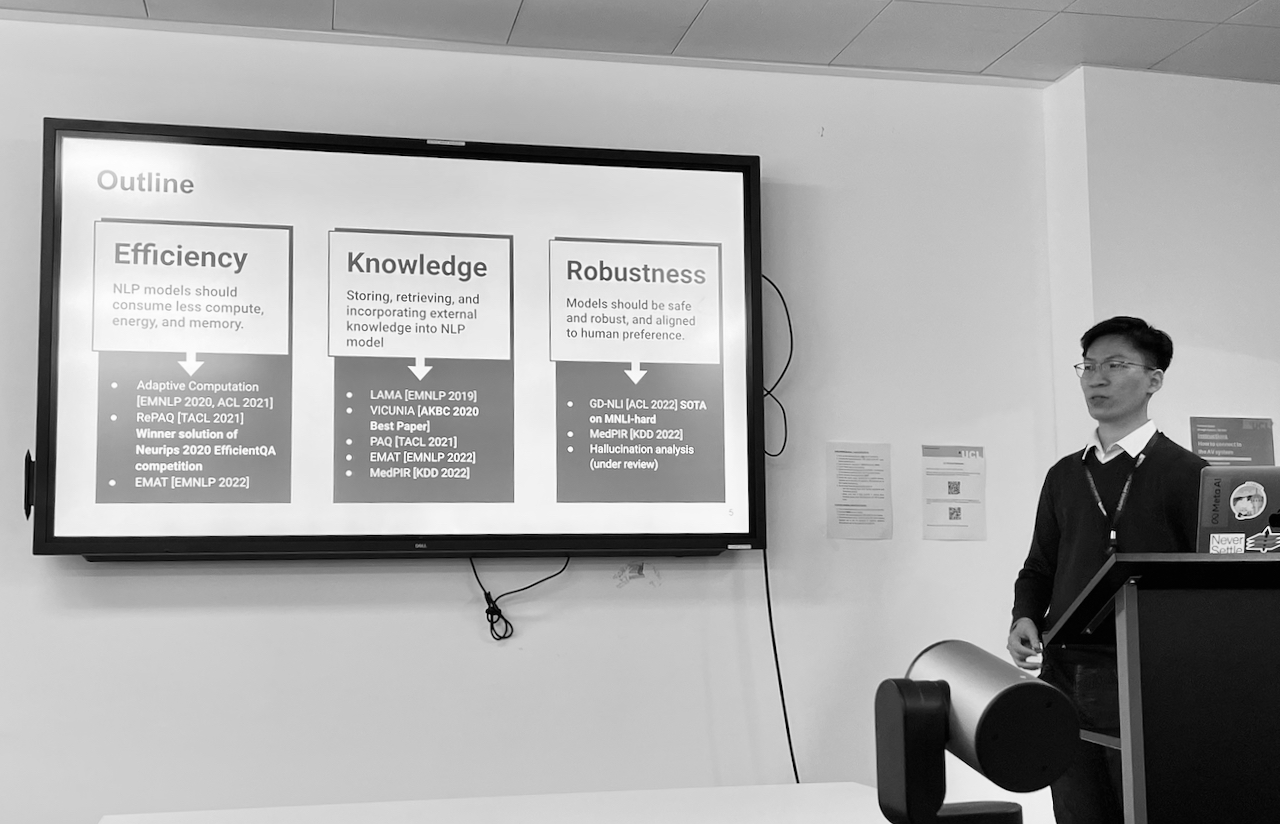

Towards efficient, knowledgeable and robust language models

The first session I caught was Yuxiang Wu, and it set the scene for the whole day. There’s an interplay between the efficiency of large language models, their access to knowledge, and how robust or truthful they are. So although there are many threads at each of those three areas, improving access to external knowledge increases accuracy and potentially lets you use smaller models.

Pathway to in-house ChatGPT solutions

Yao Lu’s presentation was very timely. The example he gave was using a GPT-2 model to write emails, and improving it via fine tuning with a small number of examples. I was wondering how realistic something like this was, and how much data you need. Not so much! As was shown the following week in the Alpaca release.

Safety and alignment

This presentation from Aligned AI — I’m still not sure exactly what they do, but the alignment problem in AI is about ensuring systems are in step with our goals. For example, it looks like Preamble (for example) let you take a model, and pick your ethics!

Law

(Sorry, not the clearest photo, but it’s here to aid my memory more than anything else.)

(Sorry, not the clearest photo, but it’s here to aid my memory more than anything else.)

A trio of talks on regulation from Taylor Vinters, a law firm. I’ve attended a few Taylor Vinters events via the NVIDIA Inception programme, so was familiar with much of this (I should write those notes up, at some point). But I didn’t know about the National Security and Investment Act 2021, which Okachi Kejeh presented. She pointed out a very broad definition of AI usage, and the mandatory notification rules for investments into companies.

Summary

A day well spent, and encouraging to see that smaller models have their place, and there’s fundamental research going on to improve model behaviour.